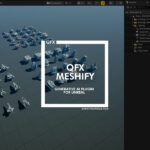

I recently built a Stable Diffusion plugin for Unreal Engine that lets you render scenes directly inside the viewport.

The tool captures the scene's depth map and feeds it into Stable Diffusion to generate a new image, effectively blending real-time 3D rendering with AI image generation.
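To give a rough idea of the depth step, here is a minimal Python sketch of how raw scene depth could be turned into an 8-bit depth image before it is handed to a depth-conditioned diffusion model. This is not the plugin's actual code: the function name `normalize_depth`, the `near`/`far` parameters, and the "nearer is brighter" convention are all assumptions for illustration, and the convention your model expects may differ.

```python
def normalize_depth(depth_rows, near=None, far=None):
    """Map raw depth values (distance from the camera) to 0-255 integers.

    Assumption: the downstream model expects nearer surfaces to be brighter.
    depth_rows is a list of rows, each a list of floats (hypothetical input).
    """
    flat = [d for row in depth_rows for d in row]
    lo = min(flat) if near is None else near
    hi = max(flat) if far is None else far
    span = hi - lo
    if span <= 0:
        # Flat or degenerate depth range: return a uniform black image.
        return [[0 for _ in row] for row in depth_rows]

    out = []
    for row in depth_rows:
        out_row = []
        for d in row:
            clamped = min(max(d, lo), hi)      # keep values inside [lo, hi]
            t = (clamped - lo) / span          # 0.0 at near, 1.0 at far
            out_row.append(round((1.0 - t) * 255))  # invert: near = bright
        out.append(out_row)
    return out


if __name__ == "__main__":
    # Tiny 2x2 "depth buffer" in arbitrary scene units.
    print(normalize_depth([[100.0, 200.0], [300.0, 500.0]]))
```

Passing explicit `near` and `far` values instead of relying on the per-frame minimum and maximum keeps the brightness scale stable from one capture to the next, which tends to matter when you iterate on the same scene.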

I first put this tool to use with a real estate company to generate high-quality interior visuals. By training a custom Stable Diffusion model on a curated set of the company’s own interior photos, the plugin was able to produce renders that matched their specific design style and branding. This approach allowed for rapid iteration and stylistic consistency, transforming Unreal Engine viewports into a powerful concept visualization tool for architectural and interior design.

Trained Model

I also experimented with a more personal project, training a Stable Diffusion model using photos of my friend Alexandre W. (with his full consent).

Using a mannequin character in the Unreal viewport, I was able to place him in a variety of imagined scenarios and generate AI-enhanced renders. This workflow allowed me to explore creative scene composition while combining Unreal’s real-time capabilities with the flexibility of a custom-trained diffusion model.

Equirectangular Render

I’ve just added a new feature to the plugin. You can now render 360° panoramic shots of your current scene as equirectangular maps.

This means you can step right inside your AI-generated environments and freely look around, giving you a fully immersive view of your creation. It’s a great way to explore lighting, composition, and mood from every angle.
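For anyone curious about what an equirectangular map actually encodes, here is a small Python sketch of the mapping between panorama pixels and view directions. It is an illustration, not the plugin's implementation: the function names are hypothetical, and the axis convention (X forward, Y right, Z up, with the image center looking straight ahead) is an assumption.

```python
import math


def equirect_pixel_to_direction(x, y, width, height):
    """Return the unit view direction for the center of pixel (x, y).

    Assumed convention: X forward, Y right, Z up; the image center looks
    along +X, the top row looks up, the bottom row looks down.
    """
    lon = ((x + 0.5) / width) * 2.0 * math.pi - math.pi   # -pi .. pi
    lat = math.pi / 2.0 - ((y + 0.5) / height) * math.pi  # pi/2 .. -pi/2
    cos_lat = math.cos(lat)
    return (cos_lat * math.cos(lon), cos_lat * math.sin(lon), math.sin(lat))


def direction_to_equirect_uv(dx, dy, dz):
    """Return normalized (u, v) in [0, 1] for a non-zero direction vector."""
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    lon = math.atan2(dy, dx)
    lat = math.asin(dz / norm)
    u = (lon + math.pi) / (2.0 * math.pi)
    v = (math.pi / 2.0 - lat) / math.pi
    return (u, v)


if __name__ == "__main__":
    width, height = 2048, 1024  # equirectangular maps use a 2:1 aspect ratio
    direction = equirect_pixel_to_direction(512, 256, width, height)
    print(direction, direction_to_equirect_uv(*direction))
```

The 2:1 aspect ratio comes from the map covering 360° horizontally and 180° vertically, so each pixel spans the same angle in both directions.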

Next: I’ll be diving into Gaussian Splatting to turn these renders into fully textured 3D scenes.
