UPDATE APRIL 12, 2021

Reviewed WebGL and blog pages. Standalone version is now running under Unity 2021 and should be ready for release quite soon.

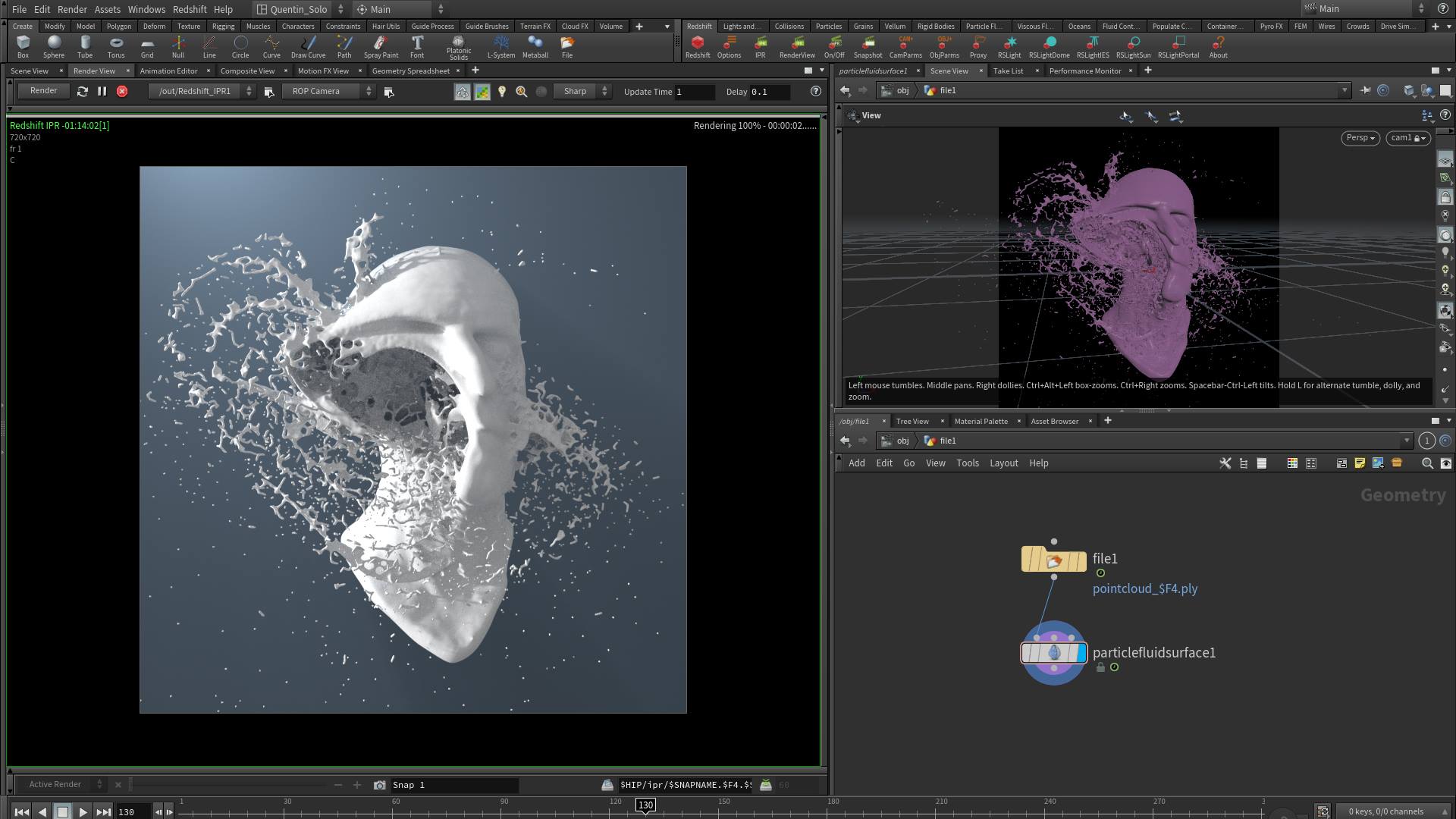

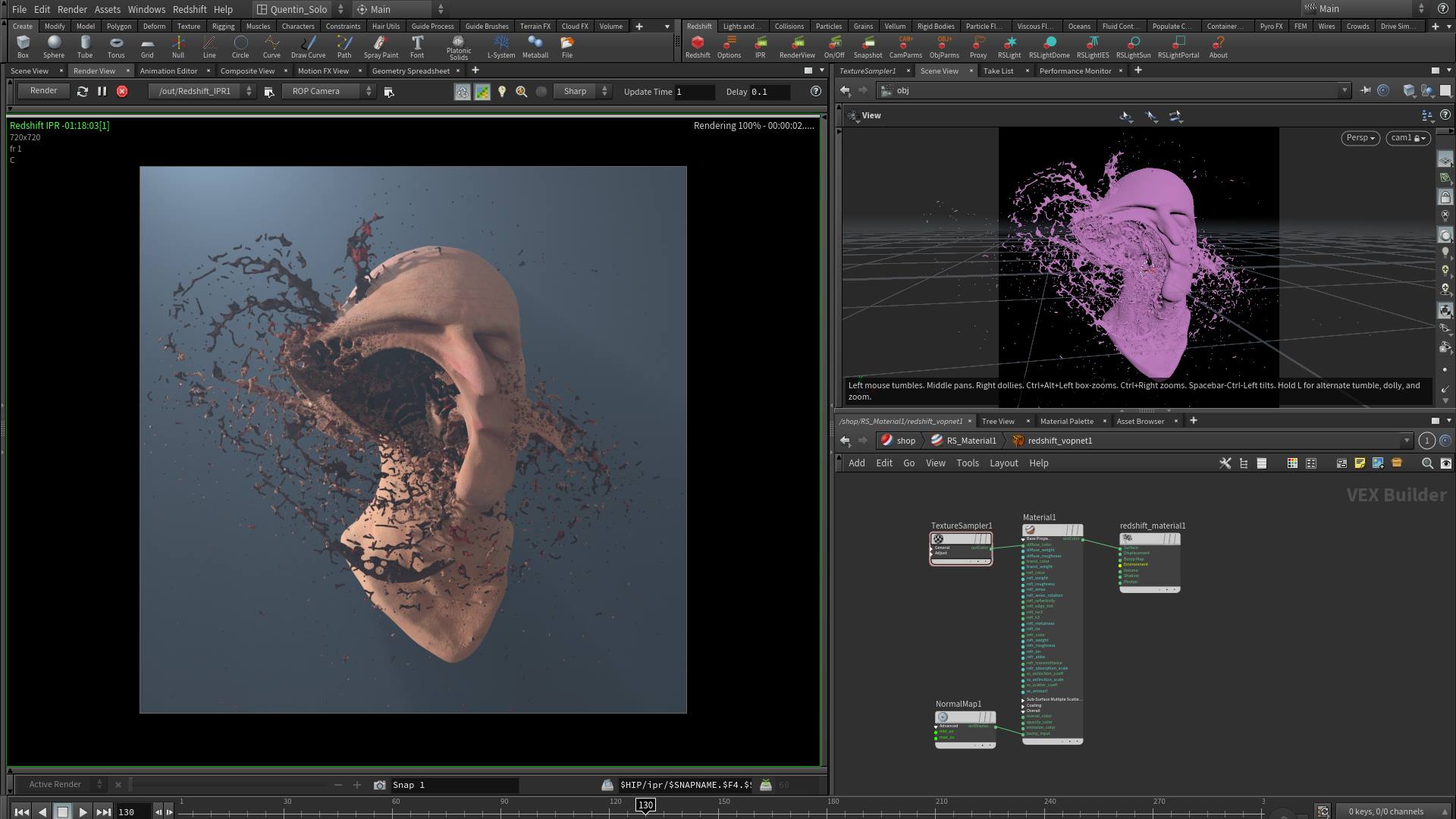

PCSB can now export interesting point clouds into Houdini (or other software) to do some nice renderings.

You can test a limited version of the export feature yourself, right here (one frame only):

Why a Desktop version?

I wanted to be able to export PLY sequences at a high framerate so I could animate them in Houdini.

I managed to do it from the browser, but I quickly ran into memory problems and browser limitations. I was only able to get short sequences with a very low framerate.

That’s why I decided to port all this native OpenGL 2.x code to Unity and take advantage of the power of DirectX11 and compute shaders. I still have a lot to do, but I can now record very large sequences with a decent framerate… and export them very quickly to the hard drive.
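As a rough illustration of what one exported frame might contain, here is a minimal sketch that serializes a point cloud to an ASCII PLY string. The names `toAsciiPly` and `frameName` and the flat-array inputs are assumptions made for this example; the actual PCSB exporter is not shown in this post and may well write binary PLY instead.

```javascript
// Hypothetical helper, not the PCSB exporter: builds one ASCII PLY frame.
// positions: flat [x0, y0, z0, x1, ...]; colors: flat [r0, g0, b0, ...] in 0..255.
function toAsciiPly(positions, colors) {
  if (positions.length % 3 !== 0 || positions.length !== colors.length) {
    throw new Error("positions and colors must be flat xyz/rgb arrays of equal length");
  }
  const count = positions.length / 3;
  const lines = [
    "ply",
    "format ascii 1.0",
    `element vertex ${count}`,
    "property float x",
    "property float y",
    "property float z",
    "property uchar red",
    "property uchar green",
    "property uchar blue",
    "end_header",
  ];
  for (let i = 0; i < count; i++) {
    const p = i * 3;
    lines.push(
      `${positions[p]} ${positions[p + 1]} ${positions[p + 2]} ` +
        `${colors[p]} ${colors[p + 1]} ${colors[p + 2]}`
    );
  }
  return lines.join("\n") + "\n";
}

// Hypothetical naming scheme for a sequence: frame_0000.ply, frame_0001.ply, ...
function frameName(index) {
  return `frame_${String(index).padStart(4, "0")}.ply`;
}
```

Writing one such file per simulation step, with zero-padded names, gives a numbered sequence on disk. Building every frame as a string in memory is also the kind of approach that could run into the browser memory limits mentioned above.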

Various Experiments:

Guys! Me and my point cloud shit again 😅 I found a way to export PLY sequences. This small webapp starts to become really interesting. Here is a bunch of these exports into #houdini, rendered with #redshift, as always. #webgl #threejs #pointcloud #indiedev #3d #vfx #cgi pic.twitter.com/bLtReTM45x

— Q. (@qornflex) March 29, 2019

Forbidden Research 02, another step in weirdness rendering using PCS, Houdini and Redshift. I’m very sorry for sensible souls 😞 #houdini #redshift #webgl #pointcloud #indiedev #3d #digitalart #vfx #cgi #gore pic.twitter.com/SwW4OBtgOB

— Q. (@qornflex) April 1, 2019

Forbidden Research 03 – Still working on PLY sequence export from PointCloud Sandbox #houdini #redshift #webgl #pointcloud #indiedev #3d #digitalart #vfx #cgi #gore pic.twitter.com/A4UYaM0tHR

— Q. (@qornflex) April 10, 2019

UPDATE JUNE 16, 2017

Finally released: pcsb.quentinlengele.com

UPDATE NOVEMBER 08, 2016

I got some time to optimize my FBO engine and expose a few parameters to UI controls.

The user can now upload an OBJ or PLY file, apply tessellation iterations to add more vertices to the cloud, play with it using a brush tool, take snapshots, freeze the velocities and gravity, and so on. I think it’s almost ready for a first public release.
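To give an idea of how a tessellation iteration can add vertices to a cloud, here is a minimal sketch of one midpoint-subdivision pass. This is a common technique and only an assumption about what such a pass could look like; the function name `tessellateOnce` and the array-based mesh layout are hypothetical and not taken from the PCSB source.

```javascript
// Hypothetical sketch: one tessellation pass that adds the midpoint of every
// unique triangle edge as a new point.
// vertices: array of [x, y, z]; triangles: array of [a, b, c] vertex indices.
function tessellateOnce(vertices, triangles) {
  const out = vertices.map((v) => v.slice());
  const midpointOf = new Map(); // "lo_hi" edge key -> index of its midpoint

  const midpoint = (a, b) => {
    const key = a < b ? `${a}_${b}` : `${b}_${a}`;
    if (!midpointOf.has(key)) {
      const va = vertices[a];
      const vb = vertices[b];
      out.push([(va[0] + vb[0]) / 2, (va[1] + vb[1]) / 2, (va[2] + vb[2]) / 2]);
      midpointOf.set(key, out.length - 1);
    }
    return midpointOf.get(key);
  };

  const newTriangles = [];
  for (const [a, b, c] of triangles) {
    const ab = midpoint(a, b);
    const bc = midpoint(b, c);
    const ca = midpoint(c, a);
    // Split each triangle into four so the next pass can refine it again.
    newTriangles.push([a, ab, ca], [ab, b, bc], [ca, bc, c], [ab, bc, ca]);
  }
  return { vertices: out, triangles: newTriangles };
}
```

Each pass multiplies the triangle count by four and adds one point per unique edge, so a few iterations are enough to densify a sparse mesh considerably.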

And here is my Simulation Fragment Shader:

```glsl
#extension GL_EXT_draw_buffers : require
#extension GL_EXT_frag_depth : enable

precision highp float;

uniform sampler2D originTexture;
uniform sampler2D posTexture;
uniform sampler2D velTexture;
uniform sampler2D propertyTexture;

uniform float dt;
uniform float time;

uniform mat4 uMVMatrix;
uniform mat4 uPMatrix;

uniform float meshScale;

uniform vec3 mousePosition;
uniform vec3 mouseVelocity;

uniform float noiseState;
uniform vec3 noiseFBM;
uniform vec3 noiseSeed;
uniform float noiseScale;

uniform float forceState;
uniform float brushRadius;
uniform float brushGravityScale;
uniform float brushForceScale;
uniform float gravityScale;
uniform float maxAge;

varying vec2 vuv;

// Not standard GLSL: presumably expanded by a custom preprocessor before compilation.
#include noise.glinc

void main(void)
{
    vec4 o = vec4(0.0, 0.0, 0.0, 1.0);
    vec4 pos = vec4(0.0, 0.0, 0.0, 1.0);
    vec4 vel = vec4(0.0, 0.0, 0.0, 1.0);
    vec4 prop = texture2D( propertyTexture, vuv );

    if (prop.a == 1.0) // check if particle is active
    {
        o = texture2D( originTexture, vuv ) * meshScale;
        pos = texture2D( posTexture, vuv );
        vel = texture2D( velTexture, vuv );

        vec3 dirToOrigin = o.xyz - pos.xyz;
        float distToOrigin = length(dirToOrigin);

        vec3 dirToMouse = pos.xyz - mousePosition;
        float distToMouse = length(dirToMouse);
        vec3 dir2m = normalize(dirToMouse);

        // distance falloff terms, clamped so the brush never divides by a tiny value
        float diffm = max(distToMouse / (brushRadius * 0.1), 3.0);
        float diffm2 = max(distToMouse / (brushRadius * 0.1), 3.0);

        vec3 brushMoveForce = (mouseVelocity * brushForceScale) / (diffm*diffm);
        vec3 brushGravity = vec3(0, 0, 0);

        // brush gravity according to mouse position -------------------------
        brushGravity = (dirToMouse / (diffm2*diffm2)) * brushGravityScale;

        // apply brush forces when forceState is set -------------------------
        if (forceState > 0.0)
        {
            vel.xyz += brushMoveForce - brushGravity;
        }

        // handle noise ------------------------------------------------------
        if (noiseState == 1.0)
        {
            applyPerlin(noiseFBM, pos.xyz, vel.xyz, noiseSeed, distToOrigin * (noiseScale * 0.01));
        }

        // handle gravity (pull back toward the origin position) -------------
        vel.xyz = mix(vel.xyz, dirToOrigin * gravityScale, dt);

        // apply velocity to position ----------------------------------------
        pos.xyz += vel.xyz * dt;

        // proximity to the mouse, stored in the property map ----------------
        prop.r = clamp((1.0 / (distToMouse * 0.5)) * 50.0, 1.0, 50.0);
    }

    gl_FragData[0] = pos;
    gl_FragData[1] = vel;
    gl_FragData[2] = prop;
}
```
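The last two steps of the shader (the pull back toward the origin and the position update) can be mirrored on the CPU to see how a particle behaves over time. The sketch below is only an illustration under simplifying assumptions: it handles a single particle, leaves out the brush and noise terms, and the name `stepParticle` is hypothetical.

```javascript
// Same definition as GLSL mix(): linear blend between a and b.
function mix(a, b, t) {
  return a * (1 - t) + b * t;
}

// CPU mirror of the "handle gravity" and "apply velocity" steps for one particle.
// origin, pos, vel are [x, y, z] arrays; gravityScale and dt match the uniforms.
function stepParticle(origin, pos, vel, gravityScale, dt) {
  // vel = mix(vel, dirToOrigin * gravityScale, dt)
  const newVel = vel.map((v, i) => mix(v, (origin[i] - pos[i]) * gravityScale, dt));
  // pos += vel * dt
  const newPos = pos.map((p, i) => p + newVel[i] * dt);
  return { pos: newPos, vel: newVel };
}

// Usage: a displaced particle drifts back toward its origin.
let particle = { pos: [1, 0, 0], vel: [0, 0, 0] };
for (let i = 0; i < 500; i++) {
  particle = stepParticle([0, 0, 0], particle.pos, particle.vel, 1.0, 0.1);
}
```

Because the velocity is blended toward `dirToOrigin * gravityScale` rather than simply accumulated, the motion is damped: with these example values the particle settles on its origin instead of oscillating forever.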

UPDATE JULY 21, 2016

At last, my native WebGL particles support (classic) shadow maps, and Phong lighting is fully integrated with the ThreeJS pipeline using a point-sprite technique with generated depth.

Another live demo is coming, but I first need to expose some additional parameters and build a fresh GUI. In the meantime, here is another preview:

FIRST RESEARCH MAY 4, 2016

I have written particle systems in many different environments. This time, I am porting that experience to a web page with WebGL and JavaScript.

To get started, I looked for examples and found quite a lot, with different approaches, but I was not happy with their functionality or the structure of their source code. That’s why I wrote a new FBO-oriented system from scratch, to get proper access to the draw buffers extension (GL_EXT_draw_buffers). With this GL extension I can write to more than one texture from the fragment shader and handle more data in a GPGPU way, with position maps, velocity maps, extra-parameter maps, and so on.
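On the JavaScript side, WebGL 1 exposes this capability as the `WEBGL_draw_buffers` extension. The sketch below shows one way several textures could be attached to a single framebuffer so that the fragment shader can write to `gl_FragData[0]`, `gl_FragData[1]` and so on. It assumes the textures were created elsewhere, and the function name `createMrtFramebuffer` is hypothetical; it is not the FBO engine described here.

```javascript
// Minimal sketch: attach N textures to one framebuffer as color attachments.
// gl: a WebGL 1 rendering context; textures: WebGLTexture objects created elsewhere.
function createMrtFramebuffer(gl, textures) {
  const ext = gl.getExtension("WEBGL_draw_buffers");
  if (!ext) {
    throw new Error("WEBGL_draw_buffers is not supported on this device");
  }

  const fbo = gl.createFramebuffer();
  gl.bindFramebuffer(gl.FRAMEBUFFER, fbo);

  // COLOR_ATTACHMENTi_WEBGL constants are consecutive, so an offset is enough.
  const attachments = textures.map((texture, i) => {
    const attachment = ext.COLOR_ATTACHMENT0_WEBGL + i;
    gl.framebufferTexture2D(gl.FRAMEBUFFER, attachment, gl.TEXTURE_2D, texture, 0);
    return attachment;
  });

  // Tell WebGL which attachments gl_FragData[i] should write into.
  ext.drawBuffersWEBGL(attachments);

  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  return { fbo, attachments };
}
```

With three textures (for example position, velocity and properties), one draw call of a full-screen quad can update all three maps at once.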

To illustrate this approach, I created a model of myself using a Kinect 2 device to get a PLY file with encoded vertex colors. Then I use ThreeJS to load and parse the PLY file. I create data textures to store 32-bit values for the vertex positions, velocities, colors and other parameters such as distance from the mouse, size, and so on. To process this data, I use an old texture-swapping technique as the input/output of my framebuffer, because WebGL (OpenGL ES 2.0) has no compute feature like OpenCL or DirectCompute (more here).
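The two ideas in this paragraph, storing per-vertex values in a float texture and swapping textures between passes, can be sketched in plain JavaScript. Everything named here (`textureSizeFor`, `packPositions`, `PingPong`) is hypothetical, and using the alpha channel to mark used texels is an assumption for the example, not a description of the actual data layout.

```javascript
// Smallest square texture side that can hold `count` texels.
function textureSizeFor(count) {
  return Math.max(1, Math.ceil(Math.sqrt(count)));
}

// Pack flat xyz positions into RGBA float texels, one particle per texel.
// Alpha is set to 1 for real particles and left at 0 for padding texels.
function packPositions(positions) {
  const count = positions.length / 3;
  const size = textureSizeFor(count);
  const data = new Float32Array(size * size * 4);
  for (let i = 0; i < count; i++) {
    data[i * 4] = positions[i * 3];
    data[i * 4 + 1] = positions[i * 3 + 1];
    data[i * 4 + 2] = positions[i * 3 + 2];
    data[i * 4 + 3] = 1;
  }
  return { data, size };
}

// Ping-pong pair: the shader reads from `read` and renders into `write`,
// then the two are swapped so the output becomes the next pass's input.
class PingPong {
  constructor(a, b) {
    this.read = a;
    this.write = b;
  }
  swap() {
    [this.read, this.write] = [this.write, this.read];
  }
}
```

In ThreeJS, the packed array could then be uploaded with something like `new THREE.DataTexture(data, size, size, THREE.RGBAFormat, THREE.FloatType)`. The swap is needed because a shader cannot safely read from the same texture it is rendering into.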

I also made a complete integration of this FBO particle system with the ThreeJS lighting pipeline.

The particles can be rendered as spherical billboards with generated normals and depth. Later, I’ll try to get a Lagrangian render of these particles (like the one I made in Unity, here).
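A common way to generate such normals and depth is to treat each point sprite as the front half of a sphere. The sketch below shows the math on the CPU for one fragment. It is an assumption about the general technique, not the actual shader, and the names `sphereNormal` and `sphereViewZ` are hypothetical.

```javascript
// u, v: point-sprite coordinate in [0, 1] (as given by gl_PointCoord).
// Returns null outside the disc (where a shader would discard the fragment),
// otherwise the unit normal of a camera-facing sphere at that fragment.
function sphereNormal(u, v) {
  const x = u * 2 - 1;
  const y = 1 - v * 2; // gl_PointCoord has its origin at the top-left
  const r2 = x * x + y * y;
  if (r2 > 1) {
    return null;
  }
  return [x, y, Math.sqrt(1 - r2)];
}

// View-space z of the sphere surface for a sprite centered at centerViewZ
// (negative in front of the camera) with the given radius.
function sphereViewZ(centerViewZ, radius, normal) {
  return centerViewZ + radius * normal[2];
}
```

The normal feeds the lighting equation as if the sprite were real geometry, and the adjusted z can be projected and written as fragment depth so that neighbouring spheres intersect correctly.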

Now, I’m working on PSM (Particle Shadow Map) (more here).

The source code is not fully cleaned up yet, but I’ll try to push a live demo online soon.

Here is a short video preview running in Google Chrome.

4 comments

Jean Claude Robert - May 5, 2016

Super Nice as usual ! And Nils Frahm for the bgr-music perfect match 🙂

jnt - July 29, 2016

“Mindblowing!”

Beats Away - January 8, 2017

you are amazing. your work of particle visualization+customization is the best on youtube. i.e. the world. Looking forward to learn more about your fbo library!

NIN - March 9, 2023

It’s always in Unity :/

Nice work though